Artificial Intelligence (AI) has moved quickly from a speculative concept to a core component of modern technology. As AI systems become more deeply integrated into society, the ethics of how they are built and used matter more with each deployment. Ethical AI refers to the development and deployment of AI technologies in a manner that is responsible, fair, and aligned with human values. The field aims to ensure that AI systems are designed and used in ways that respect individual rights and societal norms.

Ethical AI matters because the rapid advancement of AI technologies presents both remarkable opportunities and formidable challenges. On one hand, AI has the potential to revolutionize industries, enhance efficiency, and solve complex problems. On the other hand, if not carefully managed, AI can exacerbate existing inequalities, infringe on privacy, and lead to unintended harmful outcomes. This duality underscores the need for a robust ethical framework to guide AI development and application.

Neglecting ethical considerations in AI development can have far-reaching consequences. For instance, biased algorithms can perpetuate and even amplify societal prejudices, leading to unfair treatment of certain groups. Lack of transparency in AI decision-making processes can erode trust and accountability, making it difficult for individuals to understand or challenge decisions that affect their lives. Moreover, inadequate attention to privacy can result in unauthorized data access and misuse, posing significant risks to personal and organizational security.

To address these challenges, ethical AI is built on several key principles. Fairness ensures that AI systems do not discriminate against individuals or groups. Transparency demands that the workings of AI systems are understandable and accessible to stakeholders. Accountability holds developers and organizations responsible for the outcomes of their AI technologies. Privacy safeguards the sensitive information that AI systems often handle, ensuring that data is protected and used appropriately. Together, these principles form the cornerstone of ethical AI, guiding the development of technologies that are not only innovative but also aligned with societal values and human rights.

Challenges in Implementing Ethical AI

The integration of ethical principles into AI systems presents a myriad of challenges, starting with the complexity of defining and standardizing ethical guidelines. Differing cultural, legal, and social norms across the globe make it difficult to develop a universally accepted framework for ethical AI. This lack of standardization complicates the creation of AI systems that can operate seamlessly and fairly across diverse environments.

Technically, embedding ethical guidelines into AI systems involves significant hurdles. AI developers must design algorithms that not only perform tasks efficiently but also adhere to ethical considerations, such as fairness, accountability, and transparency. This is easier said than done; ethical principles are often abstract and context-dependent, making their translation into precise, actionable code a formidable task. Additionally, the dynamic nature of AI systems, which learn and evolve over time, adds another layer of complexity. Ensuring that an AI system remains ethical throughout its lifecycle requires ongoing monitoring and adjustments, which can be resource-intensive.
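One way to see what translating an abstract principle into code can look like is a simple fairness check. The sketch below computes a demographic parity gap, meaning the difference in positive-decision rates between groups. It is only an illustration: demographic parity is one of several competing fairness definitions, and the function names, group labels, and sample data are hypothetical.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the share of positive predictions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions, groups):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical model decisions (1 = approved) and group membership.
predictions = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

gap = demographic_parity_gap(predictions, groups)
print(f"Demographic parity gap: {gap:.2f}")
```

A gap near zero means the groups receive positive decisions at similar rates. What counts as an acceptable gap is a policy choice that depends on context, which is exactly why the translation from principle to code is hard.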

Conflicts between ethical considerations and business goals further complicate the implementation of ethical AI. Companies may face pressure to prioritize profitability and performance over ethical guidelines, leading to potential compromises in areas such as data privacy and algorithmic fairness. For instance, an AI system designed to maximize user engagement might inadvertently promote biased content, compromising fairness in favor of business metrics. Balancing corporate interests with ethical imperatives is a delicate act that requires robust governance frameworks and a strong commitment to ethical AI from leadership.

Real-world examples highlight the ethical challenges in AI applications. A notable case is the bias found in predictive policing algorithms, which have been criticized for disproportionately targeting minority communities. Another example is the privacy concerns raised by AI-driven facial recognition technologies, which can lead to unauthorized surveillance and data breaches. These instances underscore the importance of carefully considering ethical implications in the development and deployment of AI systems.

Strategies for Balancing Innovation and Responsibility

In the rapidly evolving landscape of artificial intelligence, maintaining an equilibrium between innovation and ethical responsibility is paramount. One of the primary strategies to achieve this balance is the implementation of ethical design frameworks. These frameworks serve as guiding principles that ensure AI systems are developed with a focus on fairness, transparency, and accountability. By incorporating ethical considerations from the outset, developers can mitigate potential biases, ensure data privacy, and enhance the overall trustworthiness of AI technologies.

Cross-disciplinary collaboration is another critical approach to fostering ethical AI. By involving experts from diverse fields such as computer science, ethics, law, and social sciences, organizations can gain a comprehensive understanding of the multifaceted implications of AI. This collaborative effort enables the identification and resolution of ethical dilemmas that may not be apparent from a single disciplinary perspective, thus promoting more robust and responsible AI solutions.

Robust governance structures play a crucial role in maintaining ethical integrity in AI systems. Establishing clear policies and procedures for the development, deployment, and monitoring of AI technologies ensures that ethical standards are consistently upheld. This includes the creation of ethics review boards, the implementation of regular audits, and the establishment of accountability mechanisms to address any ethical breaches. Such governance structures are essential for fostering a culture of responsibility and trust within organizations.

Regulatory bodies and industry standards also play a central role in ethical AI. Regulatory agencies provide the oversight needed to ensure that AI innovations adhere to established ethical guidelines and legal requirements. Industry standards complement this oversight by offering a benchmark for best practices, enabling organizations to align their AI initiatives with broader ethical and societal expectations. By adhering to these regulations and standards, companies can navigate the complexities of AI development while maintaining ethical integrity.

Lastly, continuous monitoring and evaluation of AI systems are imperative for sustaining ethical responsibility. As AI technologies evolve, ongoing assessments are needed to identify and address emerging ethical challenges. This involves regular performance evaluations, impact assessments, and stakeholder feedback mechanisms. Through continuous monitoring, organizations can adapt their AI systems to changing ethical standards and societal expectations, ensuring long-term responsibility and sustainability in AI innovation.
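As a rough sketch of what such monitoring might look like in practice, the example below reviews a fairness metric recorded once per reporting period and flags any period that exceeds a tolerance. The metric values, period labels, tolerance of 0.1, and all names are assumptions made for illustration, not a recommended threshold or an established tool.

```python
from dataclasses import dataclass


@dataclass
class AuditResult:
    period: str
    gap: float
    needs_review: bool


def audit_periods(gaps_by_period, tolerance=0.1):
    """Flag every period whose recorded fairness gap exceeds the tolerance."""
    return [
        AuditResult(period, gap, gap > tolerance)
        for period, gap in gaps_by_period.items()
    ]


# Hypothetical fairness gaps measured at the end of each quarter.
history = {"2024-Q1": 0.04, "2024-Q2": 0.07, "2024-Q3": 0.15}

results = audit_periods(history)
flagged = [r.period for r in results if r.needs_review]
print("Periods needing ethics review:", flagged)
```

A flag here is only a trigger for human review, such as an ethics board looking at what changed in the data or the model, not an automated verdict.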

Case Studies and Future Directions

As artificial intelligence continues to advance, it is crucial to examine real-world examples of how organizations have integrated ethical principles into their AI technologies. One such case is Microsoft's AI for Good initiative. This program, which focuses on using AI to address societal challenges such as environmental sustainability, healthcare, and accessibility, exemplifies how corporate responsibility can align with technological innovation. By adhering to ethical guidelines and engaging stakeholders, Microsoft has set a benchmark for responsible AI development.

Another notable example is the collaboration between IBM and the City of New York. Together, they developed an AI-driven system to improve public safety while ensuring privacy protections. The system uses machine learning to predict crime hotspots without infringing on individual rights. This is achieved through rigorous data anonymization and compliance with privacy regulations, demonstrating the feasibility of deploying ethical AI in public administration.
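To make the idea of anonymizing data before analysis more concrete, here is a minimal sketch of one common approach: drop direct identifiers, coarsen location and time, and suppress any group with fewer than k records. It does not describe the actual system mentioned above. The record fields, the value of k, the rounding precision, and the function names are all hypothetical, and this kind of generalization reduces re-identification risk without eliminating it.

```python
from collections import Counter


def generalize(record, precision=2):
    """Keep only a coarse location cell and the hour; drop name and exact coordinates."""
    return (
        round(record["lat"], precision),
        round(record["lon"], precision),
        record["hour"],
    )


def k_anonymous_counts(records, k=3, precision=2):
    """Count incidents per coarse cell, suppressing cells with fewer than k records."""
    counts = Counter(generalize(r, precision) for r in records)
    return {cell: n for cell, n in counts.items() if n >= k}


# Hypothetical incident reports; the "name" field never reaches the output.
records = [
    {"name": "A. Example", "lat": 40.7128, "lon": -74.0060, "hour": 22},
    {"name": "B. Example", "lat": 40.7131, "lon": -74.0059, "hour": 22},
    {"name": "C. Example", "lat": 40.7126, "lon": -74.0062, "hour": 22},
    {"name": "D. Example", "lat": 40.7580, "lon": -73.9855, "hour": 9},
]

safe_counts = k_anonymous_counts(records, k=3)
print(safe_counts)
```

Only the cell shared by three reports survives. The single report in the other cell is suppressed because publishing it could point to one individual.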

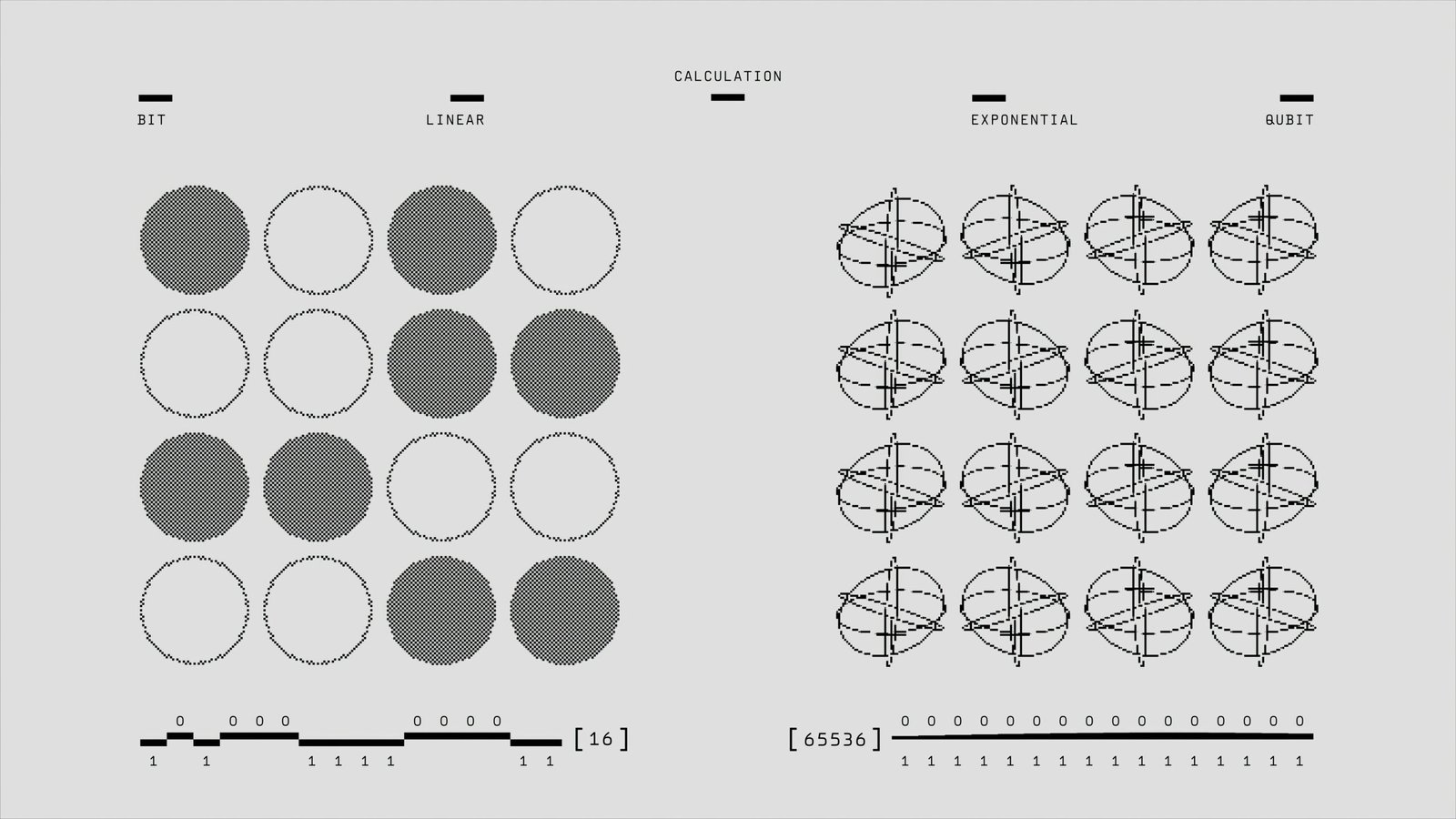

Looking ahead, the future of ethical AI will be shaped by emerging technologies and an evolving regulatory landscape. Quantum computing, for instance, could expand AI capabilities but may also raise new ethical dilemmas regarding data security and algorithmic transparency. As governance frameworks struggle to keep pace with technological advancements, continuous dialogue and education within the AI community become imperative. Stakeholders must remain engaged to navigate these evolving ethical challenges effectively.

Moreover, the integration of ethical AI principles is not a one-time effort but an ongoing process. Educational institutions, industry leaders, and policymakers must collaborate to develop curricula and training programs that emphasize ethical considerations in AI development. This holistic approach ensures that future AI professionals are equipped to handle the complex ethical issues that will inevitably arise as the technology continues to evolve.

In conclusion, the case studies of Microsoft and IBM illustrate how ethical AI can be implemented successfully, offering valuable lessons for other organizations. The future of ethical AI will depend on the proactive efforts of the entire AI community, from developers to regulators, to foster an environment where innovation and responsibility go hand in hand.