Introduction to Edge Computing

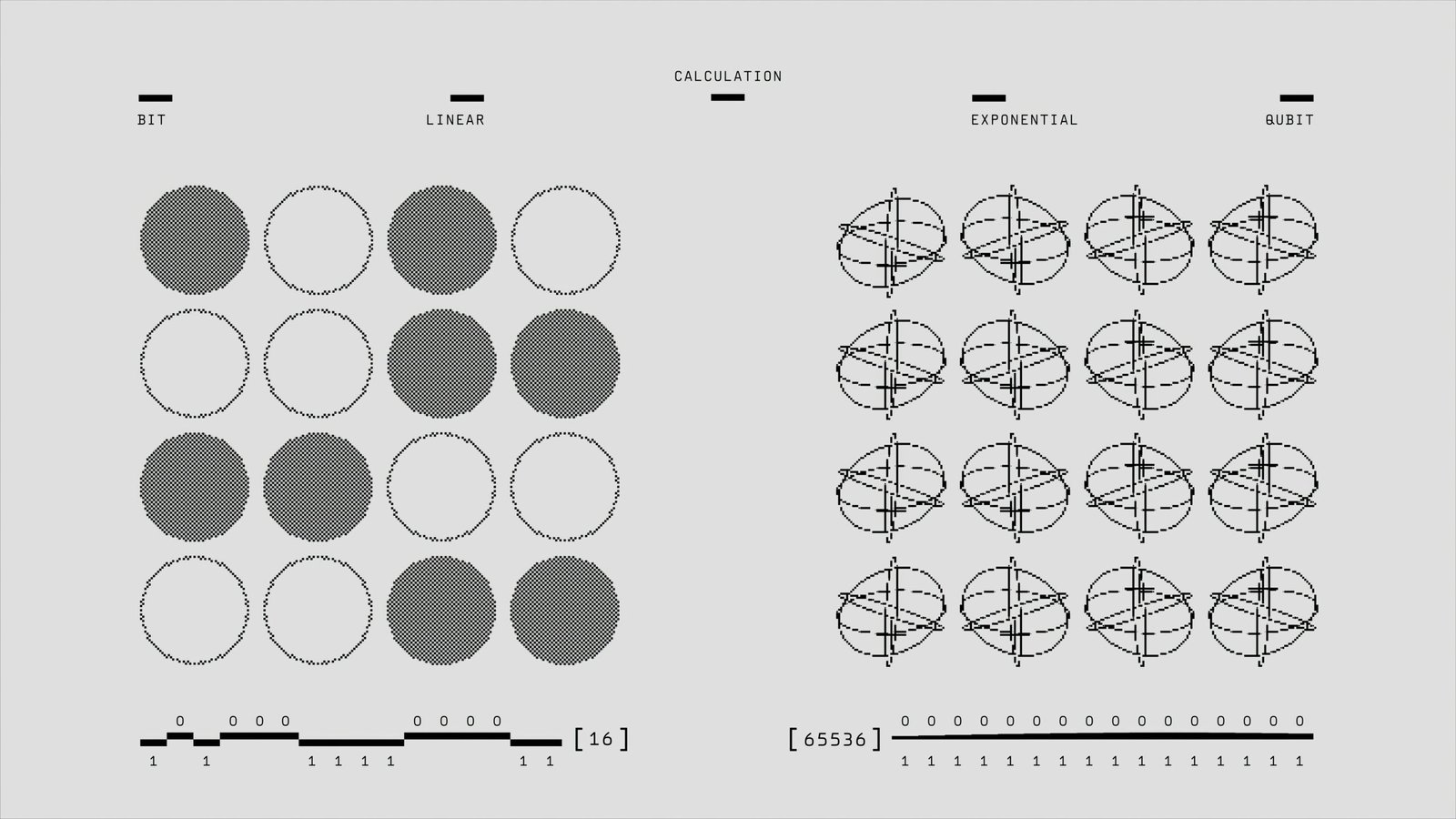

Edge computing represents a paradigm shift in cloud technology, addressing the limitations of traditional cloud computing by moving data processing closer to where data is produced. In conventional cloud models, data is transmitted to centralized data centers for processing and storage. Edge computing instead lets local devices, often called edge nodes, perform these tasks near the point of generation. Because data travels a shorter distance, this proximity reduces latency, shortens response times, and lowers the volume of data sent over wide-area links, making it a critical development in an increasingly connected world.

The drivers behind edge computing adoption are multifaceted, but the most important is the proliferation of Internet of Things (IoT) devices. As the number of connected devices grows, so does the volume of data they generate. Sending all of this data to the cloud for processing introduces latency and inefficiency, which is unacceptable for applications that require real-time analytics and decision-making, such as autonomous vehicles and smart grids.

Growing demand for real-time data processing is a second driver. Where insights are needed immediately, as in predictive maintenance for industrial IoT or in augmented reality applications, edge computing processes data at or near its point of origin. This local handling is crucial in industries where even a few milliseconds of delay are unacceptable.

Security and privacy concerns also push organizations toward edge computing. By reducing the amount of sensitive data transmitted to the cloud, edge computing lowers exposure to breaches in transit and gives organizations more control over where their data is stored and processed (data sovereignty), which matters especially in regulated industries such as healthcare and finance.

In essence, edge computing extends the capabilities of cloud computing to the network’s periphery, improving the efficiency, speed, and security of data processing. Its growing importance, driven by the data volumes and real-time demands of modern digital systems, marks a fundamental evolution in cloud architecture.

Benefits of Edge Computing

One of the most significant benefits of edge computing is reduced latency. Processing data at or near its point of origin avoids a round trip to a distant data center, which shortens response times. This is particularly important for applications where milliseconds matter, such as autonomous vehicles and real-time video analytics.

In addition to reducing latency, edge computing improves overall performance. Processing data locally reduces the need for continuous transmission to a central cloud, which is both bandwidth-intensive and time-consuming, so it eases network congestion and keeps operations running smoothly. The benefit is evident in the Industrial Internet of Things (IIoT), where machines and sensors can analyze data in real time, enabling more efficient manufacturing processes and predictive maintenance.

Another advantage is increased reliability. Edge computing reduces dependence on a central data center, which can be a single point of failure. Because processing is distributed, local operations can continue even when the central cloud is unavailable or the connection to it is lost. This resilience is critical in fields such as healthcare, where continuous data availability can directly affect patient outcomes.
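
One common way to keep an edge site working through a cloud outage is a store-and-forward buffer: the node keeps accepting and acting on data locally and uploads the backlog once the link returns. The Python sketch below assumes a caller-supplied `send` function that reports success or failure; `StoreAndForwardBuffer` and its methods are illustrative names, not a specific product's API.

```python
from collections import deque
from typing import Callable, Deque, Dict


class StoreAndForwardBuffer:
    """Keeps readings on the edge node and forwards them when the cloud is reachable."""

    def __init__(self, send: Callable[[Dict], bool], max_items: int = 1000) -> None:
        # `send` is a hypothetical upload hook: True on success, False if the link is down.
        self._send = send
        # Bounded queue: if an outage outlasts capacity, the oldest readings are dropped.
        self._pending: Deque[Dict] = deque(maxlen=max_items)

    def record(self, reading: Dict) -> None:
        """Accept a reading locally whether or not the cloud is reachable."""
        self._pending.append(reading)

    def flush(self) -> int:
        """Upload queued readings in arrival order; stop at the first failure."""
        sent = 0
        while self._pending:
            if not self._send(self._pending[0]):
                break  # cloud unreachable: keep the remaining readings for the next try
            self._pending.popleft()
            sent += 1
        return sent

    def __len__(self) -> int:
        return len(self._pending)
```

The bounded `deque` is a deliberate trade-off in this sketch: memory use on the node stays fixed, at the cost of discarding the oldest readings during a very long outage. Persisting the queue to local storage would also let it survive restarts.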

Edge computing also conserves bandwidth and reduces the load on central cloud servers. By filtering and pre-processing data locally, edge nodes transmit only essential information to the cloud. This is particularly beneficial for IoT applications that continuously generate large volumes of data. Smart cities, for instance, use edge computing to analyze data from numerous sensors and devices, optimizing traffic management and energy use without overwhelming cloud infrastructure.
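
As an illustration of local filtering, the sketch below reduces a window of raw sensor readings to a few summary statistics plus any values above an alert threshold, so only that compact summary would be sent upstream. The function name, the threshold, and the choice of summary fields are assumptions made for the example.

```python
from statistics import mean
from typing import Dict, List


def summarize_window(readings: List[float], alert_threshold: float) -> Dict[str, object]:
    """Reduce a window of raw readings to a compact summary for the cloud.

    Raw samples stay on the edge node; only aggregates and out-of-range
    values are included in the returned summary.
    """
    if not readings:
        raise ValueError("window must contain at least one reading")
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(mean(readings), 3),
        # Forward individual values only when they cross the alert threshold.
        "alerts": [r for r in readings if r > alert_threshold],
    }


if __name__ == "__main__":
    window = [21.0, 21.4, 22.1, 35.7, 21.9, 22.0]
    print(summarize_window(window, alert_threshold=30.0))
```

Six readings become one small dictionary here; with high-frequency sensors and longer windows, the reduction in uploaded data grows accordingly.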

In summary, the advantages of edge computing—ranging from reduced latency and improved performance to increased reliability and bandwidth conservation—clearly illustrate its transformative potential. By processing data closer to where it is generated, edge computing not only enhances user experiences but also drives operational efficiency across various domains.

Challenges of Edge Computing

Edge computing extends cloud capabilities to localized environments and brings numerous advantages, but organizations face significant challenges when implementing it. Addressing these hurdles is crucial to realizing its benefits. Below, we discuss common concerns, namely security, data privacy, infrastructure costs, and integration complexity, along with potential solutions.

Security Concerns

One of the principal challenges in edge computing is ensuring robust security. Each decentralized node is a potential point of attack, so the attack surface grows with the number of deployed devices. To mitigate these risks, organizations should encrypt data in transit and at rest, keep device firmware and security software up to date, and consider adopting a Zero Trust security model. Zero Trust assumes that threats may exist both inside and outside the network, so it verifies every device and user identity on each request instead of trusting anything based on its network location.
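
To make "verify every request" concrete, here is a minimal Python sketch that assumes each device shares a secret key with the edge gateway and signs each message with HMAC-SHA256. `DEVICE_KEYS`, `sign`, `verify_request`, and the 30-second skew window are hypothetical; production Zero Trust deployments more often rely on certificate-based device identities (for example, mutual TLS) and a managed key store.

```python
import hashlib
import hmac
import time
from typing import Dict, Optional

# Hypothetical per-device secrets; a real deployment would use a secrets manager
# or certificate-based identities instead of keys hard-coded in source.
DEVICE_KEYS: Dict[str, bytes] = {"sensor-17": b"example-secret-do-not-use"}

MAX_SKEW_SECONDS = 30  # assumed tolerance for clock drift between device and gateway


def sign(device_id: str, timestamp: int, payload: bytes, key: bytes) -> str:
    """HMAC-SHA256 over device ID, timestamp, and payload."""
    message = f"{device_id}|{timestamp}|".encode() + payload
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify_request(device_id: str, timestamp: int, payload: bytes,
                   signature: str, now: Optional[int] = None) -> bool:
    """Check every request, no matter which network segment it arrived from."""
    key = DEVICE_KEYS.get(device_id)
    if key is None:
        return False  # unknown device: deny by default
    now = int(time.time()) if now is None else now
    if abs(now - timestamp) > MAX_SKEW_SECONDS:
        return False  # stale message; this narrows, but does not eliminate, replay
    expected = sign(device_id, timestamp, payload, key)
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected, signature)
```

A tampered payload, an unknown device, or an old timestamp all fail verification, and nothing is trusted merely because it arrived from inside the network.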

Data Privacy Issues

Data privacy is another critical issue, especially when sensitive information is handled at the edge. Compliance with regulations such as the GDPR and CCPA is essential. Organizations can address privacy concerns by applying data anonymization techniques and by using edge data centers to pre-process and filter sensitive information before it reaches the cloud. Techniques such as secure multi-party computation can also let several parties compute over combined data without revealing their individual inputs to one another.
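
The sketch below shows one privacy-preserving pre-processing step an edge node could apply before upload: it drops direct identifiers, replaces the patient ID with a keyed-hash token, and generalizes age into a 10-year band. Strictly speaking this is pseudonymization rather than full anonymization, and regulations such as the GDPR generally still treat pseudonymized data as personal data. The field names and `PSEUDONYM_KEY` are hypothetical.

```python
import hashlib
import hmac
from typing import Dict

# Hypothetical key held only at the edge site; without it, the cloud side
# cannot map tokens back to the original patient IDs.
PSEUDONYM_KEY = b"site-local-secret-do-not-use"

# Fields assumed, for illustration, to be direct identifiers that never leave the site.
DROP_FIELDS = {"name", "address", "phone"}


def pseudonymize(record: Dict[str, object]) -> Dict[str, object]:
    """Return a copy of `record` that is safer to send to the cloud."""
    out = {k: v for k, v in record.items()
           if k not in DROP_FIELDS and k != "patient_id"}
    # Keyed hash: the same patient always maps to the same token, so records
    # can still be linked in the cloud without exposing the raw ID.
    out["patient_token"] = hmac.new(
        PSEUDONYM_KEY, str(record["patient_id"]).encode(), hashlib.sha256
    ).hexdigest()[:16]
    # Generalize exact age into a 10-year band to reduce re-identification risk.
    if "age" in out:
        low = (int(out["age"]) // 10) * 10
        out["age"] = f"{low}-{low + 9}"
    return out
```

Using a keyed hash rather than a plain hash matters here: patient IDs often come from a small, guessable range, and an unkeyed hash of them could be reversed by brute force.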

Infrastructure Costs

Implementing edge computing infrastructures can entail substantial costs. These costs include hardware investments, software licenses, and ongoing maintenance. To optimize expenses, organizations can explore a hybrid model that balances edge and cloud computing. Leasing edge infrastructure from third-party providers or leveraging modular, scalable solutions can also help manage financial outlays effectively. By conducting a cost-benefit analysis upfront, organizations can make informed decisions on resource allocation.

Integration Complexities

Integrating edge computing with existing IT frameworks poses another challenge, because disparate systems and protocols complicate interoperability. Standardized communication protocols (for example, MQTT or OPC UA in IoT and industrial settings) and API-driven architectures can make integration smoother. Centralized management platforms reduce complexity further by letting teams oversee and coordinate all edge devices from a single point. Technology partners with expertise in edge solutions can also help streamline the integration process.
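
The adapter pattern is one way to put a standardized interface into practice: each device family gets a small function that converts its native payload into one common schema, and the rest of the system only ever sees that schema. The vendor names, payload fields, and the `Reading` type below are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class Reading:
    """Common schema that every device's data is converted into."""
    device_id: str
    metric: str
    value: float
    unit: str


def from_vendor_a(msg: dict) -> Reading:
    # Hypothetical vendor A already reports metric, value and unit separately.
    return Reading(msg["id"], msg["type"], float(msg["val"]), msg["unit"])


def from_vendor_b(msg: dict) -> Reading:
    # Hypothetical vendor B reports temperature in Fahrenheit; normalize to Celsius.
    celsius = (float(msg["temp_f"]) - 32) * 5 / 9
    return Reading(msg["serial"], "temperature", round(celsius, 2), "C")


# Registry of adapters; supporting a new device family means adding one entry.
ADAPTERS: Dict[str, Callable[[dict], Reading]] = {
    "vendor_a": from_vendor_a,
    "vendor_b": from_vendor_b,
}


def normalize(source: str, msg: dict) -> Reading:
    """Convert a raw payload from `source` into the common `Reading` schema."""
    try:
        adapter = ADAPTERS[source]
    except KeyError:
        raise ValueError(f"no adapter registered for {source!r}") from None
    return adapter(msg)
```

Keeping vendor-specific parsing at the edge means downstream services and APIs depend on one stable schema rather than on every device's wire format.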

Successful implementations of edge computing often involve addressing these challenges strategically. For instance, a leading automotive manufacturer utilized edge computing to enhance real-time vehicle diagnostics. By implementing advanced encryption and anonymization methods, they secured their data pipeline. They also adopted scalable infrastructure solutions to keep costs under control and employed standardized APIs for seamless integration across different systems.

By proactively addressing these challenges with practical solutions, organizations can fully harness the potential of edge computing. This strategic approach not only resolves immediate concerns but also positions them to capitalize on future advancements in this transformative technology.

Guidelines for Implementing Edge Computing

Implementing edge computing effectively requires a structured approach to seamlessly integrate it with existing cloud strategies. Here are actionable steps to facilitate the process:

Selecting the Right Edge Devices: Choose edge devices based on the requirements of the specific use case. Evaluate the processing power, memory, and connectivity options each device offers, and consider scalability and compatibility with existing infrastructure to ensure a smooth transition. Candidates include IoT devices, gateways, and micro data centers.
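
A simple way to apply these criteria is to encode the use case's minimum requirements and filter candidate hardware against them. The sketch is illustrative only: `DeviceSpec`, `Requirements`, and the smallest-device-first ordering are assumptions, and it leaves out the scalability and compatibility checks mentioned above.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass
class DeviceSpec:
    name: str
    cpu_cores: int
    memory_gb: float
    connectivity: Set[str]  # e.g. {"ethernet", "lte"}


@dataclass
class Requirements:
    min_cpu_cores: int
    min_memory_gb: float
    required_connectivity: Set[str]


def shortlist(candidates: List[DeviceSpec], req: Requirements) -> List[DeviceSpec]:
    """Return devices that meet every minimum requirement, smallest first."""
    matches = [
        d for d in candidates
        if d.cpu_cores >= req.min_cpu_cores
        and d.memory_gb >= req.min_memory_gb
        and req.required_connectivity <= d.connectivity  # subset: all links present
    ]
    # Assumed policy: prefer the least powerful device that still fits,
    # leaving larger hardware free for heavier workloads.
    return sorted(matches, key=lambda d: (d.cpu_cores, d.memory_gb))
```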

Ensuring Seamless Connectivity: Establish a robust network infrastructure to maintain reliable communication between edge and cloud environments. Low-latency, high-bandwidth connectivity is crucial for real-time data processing. Where appropriate, use hybrid models that combine edge and cloud resources to optimize performance. Account for where devices are located and how widely they are distributed, since remote or dispersed sites are more prone to connectivity problems.
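
A hybrid model needs a rule for deciding where each task runs. Here is a minimal sketch of one hypothetical policy: a task goes to the cloud if the round trip plus cloud execution time fits its latency budget, runs on the edge if only the edge can meet the deadline and has spare capacity, and is rejected otherwise. `Task`, `place`, and the abstract `compute_units` measure are illustrative names, not part of any particular platform.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    latency_budget_ms: float  # longest acceptable end-to-end delay
    compute_units: float      # hypothetical, abstract measure of required work


def place(task: Task, cloud_rtt_ms: float, cloud_exec_ms: float,
          edge_free_units: float) -> str:
    """Return "cloud", "edge", or "reject" under a simple assumed policy."""
    if cloud_rtt_ms + cloud_exec_ms <= task.latency_budget_ms:
        # The cloud can meet the deadline, so keep scarce edge capacity free.
        return "cloud"
    if task.compute_units <= edge_free_units:
        # Only the edge is close enough to meet the deadline, and it has room.
        return "edge"
    # Neither location can meet the deadline with current capacity.
    return "reject"
```

Feeding `cloud_rtt_ms` from live measurements lets the same rule shift work to the edge automatically when connectivity degrades.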

Establishing Robust Security Measures: Stringent security measures are essential to protect edge computing deployments. Encrypt data both at rest and in transit, adopt a zero-trust security model, and conduct regular security audits to identify and mitigate vulnerabilities. Ensure compliance with industry regulations and standards to safeguard sensitive information.
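
For the in-transit half of this advice, Python's standard `ssl` module can build a client context that verifies server certificates, checks hostnames, and refuses protocol versions older than TLS 1.2. Optionally, it presents a device certificate for mutual authentication, which fits the zero-trust model. This is a sketch: `make_client_tls_context` is a hypothetical helper, the file paths are placeholders for whatever your PKI issues, and encryption at rest needs separate tooling not shown here.

```python
import ssl
from typing import Optional


def make_client_tls_context(ca_file: Optional[str] = None,
                            cert_file: Optional[str] = None,
                            key_file: Optional[str] = None) -> ssl.SSLContext:
    """Build a TLS client context for edge-to-cloud connections."""
    # create_default_context enables certificate verification and hostname checks;
    # with ca_file=None it falls back to the system's trusted CA certificates.
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=ca_file)
    # Refuse anything older than TLS 1.2.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    if cert_file and key_file:
        # Present a device certificate so the server can authenticate this node (mutual TLS).
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return ctx
```

The resulting context can be passed to standard-library clients, for example `http.client.HTTPSConnection(host, context=ctx)`, or used with `ctx.wrap_socket(sock, server_hostname=host)`.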

Evaluating and Measuring Performance: Develop a framework to measure the performance of edge computing solutions. Key performance indicators (KPIs) might include latency, throughput, and uptime. Regularly monitor and analyze these metrics to identify areas for improvement. Performance evaluation helps fine-tune processes and confirms that edge deployments meet organizational objectives.
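
The KPIs named above can be computed from raw measurements in a few lines. This sketch assumes you have collected per-request latencies and the number of seconds the node was up during a fixed observation window; `edge_kpis` is a hypothetical helper, and it uses the nearest-rank method for percentiles, one of several common definitions.

```python
import math
from typing import Dict, List


def percentile(sorted_values: List[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty, ascending list."""
    rank = max(1, math.ceil(pct * len(sorted_values) / 100))
    return sorted_values[rank - 1]


def edge_kpis(latencies_ms: List[float], window_s: float, up_s: float) -> Dict[str, float]:
    """Latency, throughput, and uptime KPIs for one observation window."""
    if not latencies_ms or window_s <= 0:
        raise ValueError("need at least one latency sample and a positive window")
    ordered = sorted(latencies_ms)
    return {
        "p50_latency_ms": percentile(ordered, 50),
        "p95_latency_ms": percentile(ordered, 95),
        # Requests completed per second over the window.
        "throughput_rps": len(latencies_ms) / window_s,
        # Share of the window during which the node was up.
        "uptime_pct": 100 * up_s / window_s,
    }
```

Tail percentiles such as p95 are usually more informative than the mean for real-time workloads, because occasional slow responses are exactly what latency-sensitive applications cannot tolerate.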

Adapting to Support Next-Generation Applications: Prepare your edge computing solutions to support next-generation applications like AI, machine learning, and autonomous systems. Implement scalable architectures that can handle increasing data volumes and the complexity of advanced applications. Collaboration between cloud and edge teams can foster innovation and bolster the integration of future technologies.