Understanding Data Lakes

Data lakes are centralized repositories designed to store vast amounts of raw data in its native format. Traditional databases usually require data to fit a predefined structure before it is stored. Data lakes instead follow a schema-on-read approach: data is stored without a predefined structure, and a schema is applied only when the data is read for a particular analysis. This makes them well suited to raw, unstructured, and semi-structured data. This flexibility is one of the defining characteristics of data lakes, allowing them to accommodate varied data types such as text, images, videos, and log files.
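To make schema-on-read concrete, here is a minimal Python sketch. It assumes the raw data is stored as JSON Lines; the field names, `READ_SCHEMA`, and the `read_with_schema` helper are hypothetical. The stored text is never modified. Each reader decides which fields to extract and how to type them at the moment it reads.

```python
import json

# Raw events as they might land in a lake: JSON lines whose shape varies (hypothetical data).
RAW_EVENTS = """\
{"device": "sensor-1", "temp_c": 21.5}
{"device": "sensor-2", "temp_c": "22.0", "humidity": 40}
{"device": "sensor-3"}
"""

# The schema lives in the reader, not in the stored data (hypothetical schema).
READ_SCHEMA = {"device": str, "temp_c": float}


def read_with_schema(raw_text, schema):
    """Apply `schema` at read time: project fields, coerce types, default missing fields to None."""
    rows = []
    for line in raw_text.splitlines():
        if not line.strip():
            continue
        record = json.loads(line)
        row = {}
        for field, cast in schema.items():
            value = record.get(field)
            row[field] = cast(value) if value is not None else None
        rows.append(row)
    return rows


rows = read_with_schema(RAW_EVENTS, READ_SCHEMA)
for row in rows:
    print(row)
```

A different reader could pass `{"humidity": int}` over the same raw text and get a different view, without anyone rewriting the stored data.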

Another key attribute of data lakes is their scalability. Data lakes scale horizontally: capacity grows by adding storage nodes or, in the cloud, simply by writing more objects, so increasing volumes of data can be accommodated without redesigning the repository. This scalability is provided by distributed storage systems such as the Hadoop Distributed File System (HDFS) and cloud object stores like Amazon S3. These platforms handle durable storage of large datasets; querying is typically done by separate processing engines, such as Spark, that read the data where it lives.

Data lakes are particularly advantageous for big data analytics, as they enable data scientists and analysts to access and explore comprehensive datasets. This capability is vital for machine learning projects, where models often require vast and diverse data to train effectively. Furthermore, the ability to store IoT data streams in their raw form allows for more granular analysis, enhancing predictive maintenance and real-time analytics.

Common use cases for data lakes span several domains. In the healthcare industry, for instance, data lakes can aggregate patient records, clinical trial data, and genomic information for comprehensive research and personalized medicine initiatives. In the finance sector, they can store transaction logs, market data, and customer interactions, aiding in fraud detection and risk management. Retail businesses utilize data lakes to integrate sales data, customer feedback, and social media interactions, providing insights into consumer behavior and enhancing personalized marketing strategies.

Overall, data lakes provide a versatile and scalable solution for organizations looking to harness the power of big data. By leveraging technologies like Hadoop and Amazon S3, businesses can manage diverse data types and support advanced analytics, driving innovation and informed decision-making.

Understanding Data Warehouses

A data warehouse is a centralized repository designed to store structured data from various sources, optimized for query and analysis. Unlike data lakes, which store raw and unprocessed data, data warehouses utilize a schema-on-write approach. This means that the data is cleaned, processed, and structured before being stored, ensuring consistency and facilitating efficient querying.
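Schema-on-write can be sketched with Python's built-in `sqlite3` module standing in for a warehouse table. This is an illustrative assumption, not how any particular warehouse product works, and constraint enforcement differs between products. The table, columns, and rows are hypothetical. The point is that the structure is declared up front and rows that violate it are rejected at write time rather than discovered later at read time.

```python
import sqlite3

# In-memory SQLite database standing in for a warehouse table (illustrative only).
conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE orders (
        order_id INTEGER PRIMARY KEY,
        customer TEXT NOT NULL,
        amount   REAL NOT NULL CHECK (amount >= 0)
    )
    """
)

incoming = [
    (1, "alice", 19.99),
    (2, None, 5.00),     # violates NOT NULL on customer
    (3, "bob", -10.0),   # violates CHECK (amount >= 0)
    (4, "carol", 42.50),
]

accepted, rejected = [], []
for row in incoming:
    try:
        conn.execute("INSERT INTO orders VALUES (?, ?, ?)", row)
        accepted.append(row[0])
    except sqlite3.IntegrityError as exc:
        # The schema is enforced at write time, so invalid rows never reach the table.
        rejected.append((row[0], str(exc)))

conn.commit()
print("accepted:", accepted)
print("rejected:", [order_id for order_id, _ in rejected])
```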

Key characteristics of data warehouses include their structured data storage and optimized query performance. These systems are built with an architecture that typically involves ETL (Extract, Transform, Load) processes. During the ETL process, data is extracted from multiple sources, transformed into a consistent format, and then loaded into the data warehouse. This ensures data integrity and consistency, making data warehouses highly reliable for analytical purposes.
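The three ETL steps can be sketched in a few lines of Python. This is a minimal sketch, assuming two hypothetical sources (a CRM export in CSV and a list of web sign-up records) whose field names and date formats disagree, with an in-memory SQLite database standing in for the warehouse. The `extract`, `transform`, and `load` functions are illustrative names, not a library API.

```python
import csv
import io
import sqlite3

# Extract: two hypothetical sources with different field names and formats.
CRM_CSV = "id,name,signup\n1,Alice,2024-01-05\n2,Bob,2024-02-10\n"
WEB_SIGNUPS = [{"user_id": "3", "full_name": " carol ", "date": "2024/03/15"}]


def extract():
    crm_rows = list(csv.DictReader(io.StringIO(CRM_CSV)))
    return crm_rows, WEB_SIGNUPS


def transform(crm_rows, web_rows):
    """Map both sources onto one consistent shape: (id, name, signup_date)."""
    out = []
    for r in crm_rows:
        out.append((int(r["id"]), r["name"].strip().title(), r["signup"]))
    for r in web_rows:
        # Normalize name casing/whitespace and the date separator to match the CRM format.
        out.append((int(r["user_id"]), r["full_name"].strip().title(),
                    r["date"].replace("/", "-")))
    return out


def load(conn, rows):
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers "
        "(id INTEGER PRIMARY KEY, name TEXT NOT NULL, signup_date TEXT NOT NULL)"
    )
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(conn, transform(*extract()))
customers = conn.execute("SELECT * FROM customers ORDER BY id").fetchall()
print(customers)
```

Doing the cleanup in `transform`, before anything is written, is what gives the warehouse its consistent format: every consumer of `customers` sees the same names and date layout.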

The architecture of a data warehouse is designed to support complex queries and large-scale data analysis. It often includes components such as a staging area for data transformation, a storage area for processed data, and a presentation layer for accessing the data via queries. This layered approach allows businesses to maintain high data quality and performance.
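The staging, storage, and presentation layers can be illustrated inside a single SQLite database. This is a sketch under stated assumptions: the table and view names (`stg_sales`, `sales`, `sales_by_region`) and the data are hypothetical, and the cleaning rule is deliberately simple. Raw text lands in staging, typed rows move into storage, and a view serves as the presentation layer that reporting queries hit.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    -- Staging area: raw values land as text, exactly as received.
    CREATE TABLE stg_sales (region TEXT, amount TEXT);
    INSERT INTO stg_sales VALUES
        ('north', '100.0'), ('south', '250.5'), ('north', '50'), ('east', 'n/a');

    -- Storage area: typed, cleaned rows. Rows whose amount does not start
    -- with a digit are dropped (a deliberately simple validity check).
    CREATE TABLE sales (region TEXT NOT NULL, amount REAL NOT NULL);
    INSERT INTO sales (region, amount)
        SELECT upper(region), CAST(amount AS REAL)
        FROM stg_sales
        WHERE amount GLOB '[0-9]*';

    -- Presentation layer: a view that reporting tools query.
    CREATE VIEW sales_by_region AS
        SELECT region, SUM(amount) AS total
        FROM sales
        GROUP BY region;
    """
)

report = conn.execute("SELECT region, total FROM sales_by_region ORDER BY region").fetchall()
print(report)
```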

Data warehouses offer numerous benefits, including the ability to perform complex queries quickly and efficiently. They provide a consistent and reliable view of the data, which is crucial for business intelligence and reporting. Because the data is structured and cleaned before being stored, users can trust the accuracy and integrity of their reports and analyses.

Popular data warehouse solutions include Amazon Redshift, Google BigQuery, and Snowflake. These platforms offer robust tools for managing and analyzing large datasets, making them suitable for a wide range of use cases. Their most common use is business intelligence, where they enable detailed reporting and operational analytics. Such use cases highlight the importance of data warehouses in turning raw data into valuable insights that drive decision-making and strategic planning.

Comparing Data Lakes and Data Warehouses

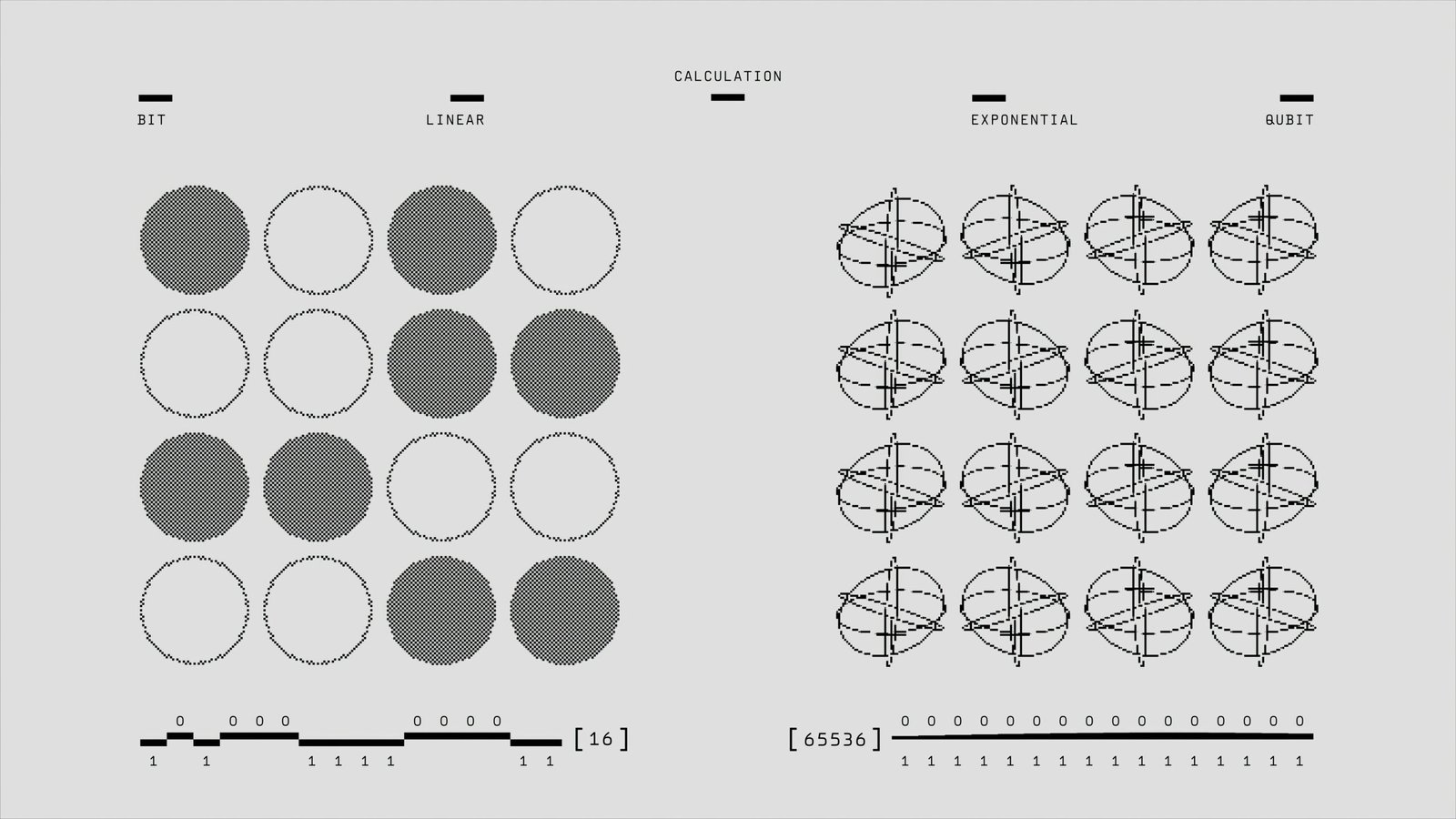

When evaluating data lakes and data warehouses, it’s crucial to understand their fundamental differences. Data lakes are designed to store vast amounts of raw data in its native format until needed. This allows for high scalability and flexibility, making them particularly suitable for unstructured and semi-structured data. On the other hand, data warehouses are optimized for structured data, enforcing schemas and facilitating complex queries and analytics.

In terms of data storage, data lakes use low-cost storage solutions, often leveraging distributed systems like Hadoop. This cost-effectiveness is a significant advantage for organizations dealing with large volumes of diverse data types. Data warehouses, while more expensive, offer high-performance storage with integrated data management tools, which are essential for delivering quick query responses and reliable data integrity.

The processing capabilities of data lakes and data warehouses also differ significantly. Data lakes support a wide range of data processing frameworks, such as MapReduce, Spark, and Flink, providing the flexibility to handle big data analytics and machine learning workloads. Conversely, data warehouses excel at running analytical SQL queries over large volumes of structured, historical data, which makes them well suited to business intelligence and reporting tasks.
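To show the programming model behind frameworks like MapReduce, here is a single-process Python sketch that counts HTTP status codes in raw log lines. The log format and function names are hypothetical, and real frameworks distribute the map and reduce tasks across a cluster and perform the shuffle over the network; only the map, shuffle, and reduce structure is the same. In a warehouse, the equivalent would be a single SQL `GROUP BY status` query over a structured table.

```python
from collections import defaultdict
from itertools import chain

# Raw log lines, as they might be stored unmodified in a data lake (hypothetical format).
LOG_LINES = [
    "GET /home 200",
    "GET /cart 500",
    "POST /cart 200",
    "GET /home 200",
]


def map_phase(line):
    """Emit (key, 1) for each line, keyed by HTTP status code."""
    _method, _path, status = line.split()
    yield status, 1


def shuffle(pairs):
    """Group values by key, as the framework does between the map and reduce phases."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Combine all values for one key into a single result."""
    return key, sum(values)


pairs = chain.from_iterable(map_phase(line) for line in LOG_LINES)
counts = dict(reduce_phase(k, v) for k, v in shuffle(pairs).items())
print(counts)
```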

From a performance perspective, data warehouses typically deliver faster query results thanks to optimized storage layouts and indexing mechanisms. However, this comes at a higher cost and requires more upfront data modeling and ETL work. Data lakes, by contrast, scale more cheaply to very large volumes of data with varied structures, which makes them a common choice when flexibility and scale matter more than query speed.

Ease of use is another important consideration. Data warehouses, with their structured nature, provide a user-friendly environment suitable for business analysts and non-technical users. In contrast, data lakes require a higher level of technical expertise to manage and extract valuable insights from the diverse data stored within.

| Aspect | Data Lake | Data Warehouse |
|---|---|---|
| Data Storage | Low-cost, raw data | High-cost, structured data |
| Data Processing | Flexible frameworks (e.g., Spark) | SQL-based engines |
| Performance and Scale | Highly scalable | High-speed queries |
| Ease of Use | Requires technical expertise | User-friendly |
| Cost | Cost-effective | Higher cost |

In some cases, hybrid solutions that incorporate both data lakes and data warehouses can provide the best of both worlds. By integrating the flexibility and scalability of data lakes with the processing power and structured query capabilities of data warehouses, organizations can achieve a balanced approach to data management. Ultimately, the choice between a data lake and a data warehouse depends on the specific needs and goals of the organization. Factors such as the types of data being handled, required analytics capabilities, and budget constraints will play a crucial role in this decision-making process.
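A hybrid flow can be sketched in Python under simple assumptions: a local temporary directory of JSON Lines files plays the lake, and an in-memory SQLite database plays the warehouse. The file name, fields, and `page_views` table are hypothetical. The raw file stays untouched in the lake for future analyses, while only the fields needed for reporting are loaded into a typed table for SQL queries.

```python
import json
import sqlite3
import tempfile
from pathlib import Path

# "Lake" zone: a directory of raw JSON Lines files kept exactly as ingested.
lake_dir = Path(tempfile.mkdtemp())
raw_file = lake_dir / "clicks-2024-01-01.jsonl"
raw_file.write_text(
    '{"user": "u1", "page": "/home", "ms": 120, "ua": "Mozilla/5.0"}\n'
    '{"user": "u2", "page": "/cart", "ms": 340}\n'
)

# "Warehouse" zone: a curated, typed table for SQL reporting.
warehouse = sqlite3.connect(":memory:")
warehouse.execute("CREATE TABLE page_views (user_id TEXT, page TEXT, load_ms INTEGER)")

for path in sorted(lake_dir.glob("*.jsonl")):
    with path.open() as f:
        records = [json.loads(line) for line in f if line.strip()]
    # Only the fields needed for reporting are loaded; the raw file stays in the lake.
    warehouse.executemany(
        "INSERT INTO page_views VALUES (?, ?, ?)",
        [(r["user"], r["page"], int(r["ms"])) for r in records],
    )
warehouse.commit()

views = warehouse.execute(
    "SELECT page, AVG(load_ms) FROM page_views GROUP BY page ORDER BY page"
).fetchall()
print(views)
```

Fields the warehouse ignores today, such as `ua` here, remain available in the lake if a later analysis needs them.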

Choosing the Right Solution for Your Organization

Choosing between a data lake and a data warehouse depends on your organization’s specific needs and goals. Each solution has unique benefits and limitations, making it crucial to evaluate several key considerations. First and foremost, assess the types of data you need to manage. Data lakes are ideal for handling raw, unstructured data, such as social media feeds, IoT sensor data, and log files. Conversely, data warehouses are optimized for structured data, facilitating advanced analytics and BI reporting.

Next, consider your intended use cases. If your primary goal is to perform detailed historical analysis, generate reports, and support business intelligence, a data warehouse may be the better option. However, for real-time data processing, machine learning, and big data analytics, a data lake is more suitable. Budget constraints are another critical factor; data lakes often present a more cost-effective solution due to their scalability and lower storage costs. However, the complexity of managing a data lake can result in higher operational expenses over time.

Evaluate your existing technology stack to understand compatibility and integration requirements. Many data lakes today are built on cloud platforms like AWS, Azure, or Google Cloud, which integrate readily with big data tools such as Spark. On the other hand, traditional data warehouses, such as those built on platforms like Oracle, Microsoft SQL Server, and Teradata, may require more effort to integrate with newer technologies.

A step-by-step framework for decision-making can simplify the selection process. Begin by assessing your current and future data needs. Identify the volume, velocity, and variety of data that your organization handles. Evaluate the technical requirements, including data ingestion, processing, and storage capabilities. Involve stakeholders from different departments to gather insights and ensure alignment with organizational objectives.
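The assessment step can be turned into a rough checklist in code. The sketch below is a toy heuristic, not a standard method: the `DataProfile` fields, the scoring weights, and the 0.5 threshold are all hypothetical and should be replaced by whatever criteria your stakeholders agree on. It simply encodes the considerations discussed in this section (data variety, machine learning, real-time needs, BI reporting, and who the users are).

```python
from dataclasses import dataclass


@dataclass
class DataProfile:
    """Hypothetical answers gathered during the assessment step."""
    share_unstructured: float   # fraction of data that is unstructured or semi-structured (0-1)
    needs_ml: bool              # machine learning / data science on raw data
    needs_real_time: bool       # streaming or real-time processing
    needs_bi_reporting: bool    # governed dashboards and standard reports
    analysts_mostly_sql: bool   # primary users are SQL/BI analysts


def recommend(p: DataProfile) -> str:
    """Toy scoring heuristic; the weights and threshold are illustrative assumptions."""
    lake = 0
    warehouse = 0
    if p.share_unstructured >= 0.5:
        lake += 2
    else:
        warehouse += 1
    if p.needs_ml:
        lake += 1
    if p.needs_real_time:
        lake += 1
    if p.needs_bi_reporting:
        warehouse += 2
    if p.analysts_mostly_sql:
        warehouse += 1
    # Strong signals on both sides suggest combining the two approaches.
    if lake >= 2 and warehouse >= 2:
        return "hybrid"
    return "data lake" if lake > warehouse else "data warehouse"


print(recommend(DataProfile(share_unstructured=0.6, needs_ml=True, needs_real_time=False,
                            needs_bi_reporting=True, analysts_mostly_sql=False)))
```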

In practice, implementation starts with recognizing potential challenges, such as data governance, security, and compliance, and addressing them proactively; for instance, establish robust policies for data quality and access control. Real-world examples can also provide valuable insights. Companies like Netflix and Uber have successfully implemented data lakes to leverage big data for predictive analytics, while financial institutions often prefer data warehouses for regulatory reporting and auditing purposes.